Microsoft has recently announced new tools for detecting deepfakes, which are videos manipulated with AI to look realistic. Such fakes have circulated for a long time and involve news outlets, agencies, politicians, and meme-makers alike. Microsoft launched the tools just before the US presidential election so that political campaigns and media organizations can detect manipulated videos more easily.

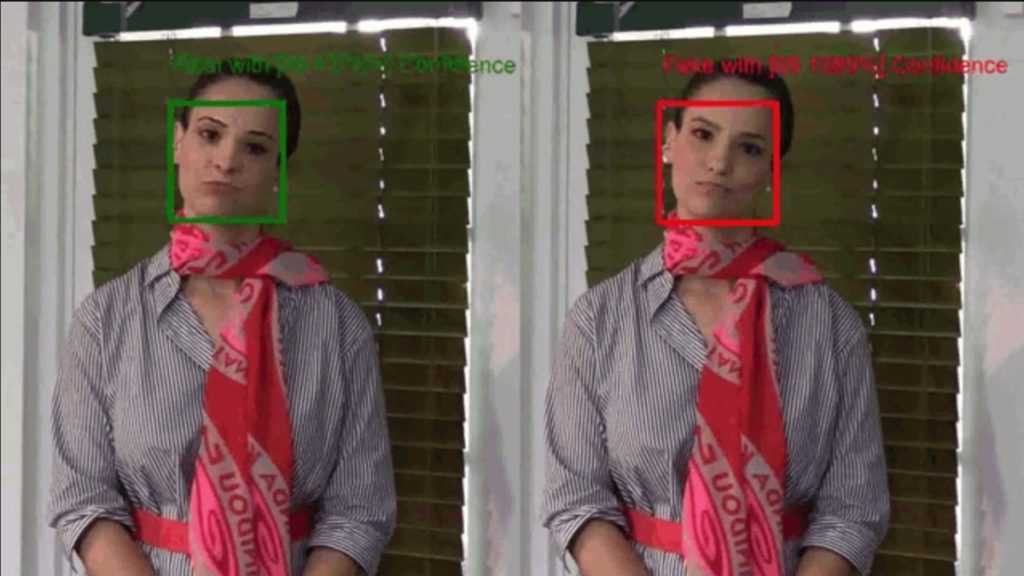

The detection tool is called Microsoft Video Authenticator. It analyzes a video frame by frame and gives a confidence score indicating whether the video is real or has been manipulated. Microsoft developed the underlying algorithm with the help of its Responsible AI team and the Microsoft AI, Ethics, and Effects in Engineering and Research (AETHER) Committee. The model was built using publicly available datasets such as FaceForensics++ and was tested on the DeepFake Detection Challenge Dataset. Microsoft is also launching a tool built on its Azure cloud infrastructure that lets creators sign a piece of content with a certificate.
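To make the idea of a per-frame confidence score concrete, here is a minimal sketch of how per-frame scores might be rolled up into a verdict for a whole video. It assumes some detector has already produced a manipulation probability between 0.0 and 1.0 for each frame; the function name `video_confidence`, the 0.5 threshold, and the "flag the video if any frame crosses the threshold" rule are all hypothetical choices for illustration, not Microsoft's actual method.

```python
from statistics import mean


def video_confidence(frame_scores, threshold=0.5):
    """Summarize hypothetical per-frame manipulation probabilities (0.0 to 1.0).

    Assumption: scores come from some external frame-level detector.
    The aggregation rule below is illustrative only.
    """
    if not frame_scores:
        raise ValueError("need at least one frame score")
    for score in frame_scores:
        if not 0.0 <= score <= 1.0:
            raise ValueError(f"score out of range: {score}")

    # Indices of frames whose score meets or exceeds the threshold.
    flagged = [i for i, score in enumerate(frame_scores) if score >= threshold]
    return {
        "mean": mean(frame_scores),       # average suspicion across the video
        "max": max(frame_scores),         # most suspicious single frame
        "flagged_frames": flagged,
        # Hypothetical rule: one strongly suspicious frame flags the video,
        # since a deepfake may only alter part of a clip.
        "likely_manipulated": bool(flagged),
    }


if __name__ == "__main__":
    print(video_confidence([0.25, 0.25, 0.75, 0.25]))
```

Reporting the flagged frame indices alongside the averages mirrors the frame-by-frame framing: a viewer can see where in the clip the suspicion comes from, not just a single number.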

There will also be a reader tool that checks whether content is authentic. Microsoft is currently working with the AI Foundation's Reality Defender 2020 initiative so that electoral organizations can get access to the tool. It is also partnering with the BBC and the New York Times to detect manipulated media during the elections. Tools like these could be a valuable initiative for detecting the fake news and media circulating on the internet.
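The sign-then-check workflow described above (a creator signs content, a reader later verifies it) can be sketched roughly as follows. This is a simplified stand-in, not Microsoft's implementation: real certificate-based signing uses public-key cryptography, whereas this sketch swaps in a shared-secret HMAC from Python's standard library so it runs without third-party packages. The function names `sign_content` and `verify_content` and the manifest layout are hypothetical.

```python
import hashlib
import hmac


def sign_content(content: bytes, key: bytes) -> dict:
    """Creator side: hash the content, then sign the hash.

    Stand-in: HMAC with a shared key replaces a certificate-based signature.
    """
    digest = hashlib.sha256(content).hexdigest()
    signature = hmac.new(key, digest.encode(), hashlib.sha256).hexdigest()
    return {"sha256": digest, "signature": signature}


def verify_content(content: bytes, manifest: dict, key: bytes) -> bool:
    """Reader side: recompute the hash and check it against the signed manifest."""
    digest = hashlib.sha256(content).hexdigest()
    # Any change to the content changes its hash.
    if not hmac.compare_digest(digest, manifest["sha256"]):
        return False
    # The signature proves the manifest came from the key holder.
    expected = hmac.new(key, digest.encode(), hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, manifest["signature"])


if __name__ == "__main__":
    key = b"demo-key"  # hypothetical secret for illustration only
    video = b"original video bytes"
    manifest = sign_content(video, key)
    print(verify_content(video, manifest, key))            # untouched content
    print(verify_content(video + b"!", manifest, key))     # tampered content
```

Hashing first keeps the signed payload small regardless of video size; with real certificates, the reader would need only the creator's public key rather than a shared secret.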